What is Cursor 2.0?

Cursor 2.0, released by Anysphere, takes what used to be a smart code assistant a big step into orchestration. It reimagines the coding editor not simply as a place where lines are autocompleted, but as a hub where a team of AI agents collaborates to build, test and review code. At its core is Composer, Cursor's proprietary model, which marks the arrival of an in-house model optimized for full software workflows.

In short, the product pivots from “help me write code” toward “let the agents build and iterate code”. (Cursor)

Why the Cursor 2.0 shift matters

Developers and team leads should take this seriously, because what’s changing isn’t just speed — it’s the workflow architecture of coding. Here’s what’s new and why it counts:

- Parallelism at scale: Cursor 2.0 supports up to eight agents running in parallel on the same prompt — each in its own isolated workspace (via git worktrees or remote machines). (Cursor)

- Latency and fluidity: Cursor says Composer completes most conversational turns in under 30 seconds, meaning fewer disruptions and more continuous flow. (Cursor)

- Large-codebase awareness: Composer was trained with “codebase-wide semantic search” and tooling that reflects real engineering contexts rather than toy datasets. (Cursor)

- Workflow re-imagined: The UI shifts from file-centric to “agent-centric”—you become less a writer of every line and more an orchestrator: define outcomes, review results, select best output. (Cursor)

So this isn’t just a speed boost. It’s arguably a paradigm shift in how AI may be integrated into software engineering.
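To make the isolation idea concrete, here is a minimal sketch of how one worktree per agent could be planned with standard git. It only builds the `git worktree add` commands and does not run them. The branch names (`agent-1`, `agent-2`, ...) and the sibling-directory layout are assumptions made for illustration, not a description of how Cursor manages worktrees internally.

```python
from pathlib import Path


def worktree_commands(repo: Path, base_branch: str, agent_count: int) -> list[list[str]]:
    """Build one `git worktree add` command per agent (nothing is executed)."""
    if not 1 <= agent_count <= 8:  # the article cites a ceiling of eight agents
        raise ValueError("agent_count must be between 1 and 8")
    commands = []
    for i in range(1, agent_count + 1):
        branch = f"agent-{i}"  # hypothetical branch naming scheme
        path = repo.parent / f"{repo.name}-agent-{i}"  # hypothetical sibling directory
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path), base_branch]
        )
    return commands


if __name__ == "__main__":
    # Print the plan for three agents branching off `main`.
    for cmd in worktree_commands(Path("/tmp/myrepo"), "main", 3):
        print(" ".join(cmd))
```

Because each agent gets its own branch and its own directory, edits made in one workspace never touch the files another agent is working on, and each result can be reviewed as an ordinary branch diff.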

How Cursor 2.0 Composer works under the hood

Here’s a more technical (yet still approachable) breakdown of what makes Composer tick. The details matter because they help you assess how realistic the claim is and where the limitations may lie.

| Feature | Description | Why it matters |
| --- | --- | --- |
| Mixture-of-Experts (MoE) architecture | Composer uses an MoE design, combining specialist sub-models. (Cursor) | This lets the model route tasks to the right expert, improving efficiency and accuracy on code tasks. |
| Reinforcement learning on real codebases | Composer was trained with codebase-wide semantic search and tooling that reflects real engineering contexts. (Cursor) | Brings the model closer to “what a developer actually does” rather than toy examples. |
| Low-latency inference | The model generates ~250 tokens/second, and most turns finish under 30 seconds. (VentureBeat) | Latency is a key barrier for interactive workflows; low latency means staying in flow. |
| Parallel agent infrastructure | Multiple agents each operate in isolation (their own worktree or machine), so they don’t clash. (Cursor) | Enables one prompt → multiple candidate solutions → you pick the best. |
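A quick back-of-the-envelope check helps put the latency figures in perspective. The sketch below simply multiplies the two reported numbers (about 250 tokens per second, turns under 30 seconds). It assumes the whole turn is spent generating tokens, which is an upper bound: real turns also spend time on tool calls and reading context.

```python
TOKENS_PER_SECOND = 250   # approximate generation speed cited in the table
TURN_BUDGET_SECONDS = 30  # "most turns finish under 30 seconds"


def max_tokens_per_turn(tokens_per_second: float, seconds: float) -> int:
    """Upper bound on tokens emitted if the whole turn is pure generation."""
    return int(tokens_per_second * seconds)


def seconds_for(tokens: int, tokens_per_second: float) -> float:
    """Generation time for a response of the given length."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    print(max_tokens_per_turn(TOKENS_PER_SECOND, TURN_BUDGET_SECONDS))  # 7500
    print(seconds_for(2000, TOKENS_PER_SECOND))                         # 8.0
```

In other words, a 30-second turn at that rate tops out at roughly 7,500 generated tokens, and a 2,000-token diff would take about 8 seconds of generation time.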

Example in practice: On a React + Node codebase, you could trigger:

- Agent A to design the new feature structure.

- Agent B to refactor a legacy utility.

- Agent C to generate tests and scaffolding.

Within minutes, you have three full diffs to review, work that might take multiple afternoons to do manually. You still review and refine, but the time to the initial result is drastically shorter.
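The fan-out pattern in this example can be sketched in a few lines. Note that `run_agent` below is a hypothetical stub that returns a placeholder string; Cursor's actual dispatch mechanism is not shown here, and the agent names and tasks are taken from the example above purely for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class AgentResult:
    agent: str
    task: str
    diff: str


def run_agent(agent: str, task: str) -> AgentResult:
    """Hypothetical stand-in for dispatching a task to an agent."""
    # A real integration would call the tool here; this stub returns a placeholder.
    return AgentResult(agent=agent, task=task, diff=f"# placeholder diff for: {task}")


def fan_out(tasks: dict[str, str]) -> list[AgentResult]:
    """Run every agent concurrently and return results in submission order."""
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(run_agent, agent, task) for agent, task in tasks.items()]
        return [future.result() for future in futures]


if __name__ == "__main__":
    results = fan_out({
        "Agent A": "design the new feature structure",
        "Agent B": "refactor a legacy utility",
        "Agent C": "generate tests and scaffolding",
    })
    for result in results:
        print(result.agent, "->", result.diff)
```

The point of the sketch is the shape of the workflow: tasks go out together, results come back as reviewable units, and the human step is the review at the end.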

Real-world case studies & stats

Here’s what we know so far about how this is being adopted and what impact it might be having.

- The release note states that early testers found the ability to iterate quickly and “trusted the model for multi-step coding tasks”. (Cursor)

- According to the internal blog, engineers at Cursor are already using Composer for day-to-day development. (Cursor)

- A tech news article observes that Cursor 2.0’s multi-agent interface and Composer model “redefines coding” by shifting the developer-AI relationship. (AI Tech Suite)

- Anecdotally, one small team reported that the time spent on test generation, integration scaffolding and documentation drafts dropped from “two days” to “half a day” after piloting Cursor 2.0, though they emphasise that manual review is still required.

In short, the productivity bump seems real in pilot use cases. But as always with emerging tools, “your mileage may vary”.

Benefits and challenges of Cursor 2.0

Let’s be balanced. This kind of shift brings strong upside — and some non-trivial trade-offs.

Key Benefits of Cursor 2.0

- Speed: Faster iteration means fewer context switches, less waiting around.

- Scale: Multiple agents tackling the same problem can yield higher quality via “competition + selection”.

- Context-rich: Being built for large codebases means fewer “lost context” mistakes.

- Shift in role: Developers move away from writing every line toward reviewing and orchestrating — potentially freeing time for higher-value tasks.

Important Challenges & Caveats

- Quality still matters – AI agents will make mistakes. You still need to review. Reddit users report earlier builds occasionally mis-handled file/application logic. (Reddit)

- Cost & access – Running multiple agents, large context models, isolated workspaces: resources and pricing may be non-trivial.

- Shift in skills – Developers and teams need to adapt: prompt engineering, agent orchestration, reviewing AI outputs. It’s a new mode of working.

- Security & tooling integration – Agent workflows that run commands/tools create new surfaces for injection or misuse. The sandboxing and isolation matter. (Cursor)

In short: the benefits are real, but you’ll only get them if you treat the system as a collaborative partner, not a “set-it-and-forget-it” autopilot.
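On the security point, one simple guardrail teams sometimes add on their own side is a command allowlist. The sketch below is a generic illustration of that idea, not Cursor's sandbox: the allowed command names are hypothetical, and the check assumes the command will later be executed as an argument list without a shell.

```python
import shlex

ALLOWED_COMMANDS = {"git", "npm", "pytest", "ls"}  # hypothetical allowlist
SHELL_METACHARACTERS = set(";|&`$><\n")            # reject chaining and redirection


def is_allowed(command_line: str) -> bool:
    """Return True only for a single, allowlisted command with no shell tricks."""
    if any(ch in SHELL_METACHARACTERS for ch in command_line):
        return False
    try:
        argv = shlex.split(command_line)
    except ValueError:  # e.g. unbalanced quotes
        return False
    return bool(argv) and argv[0] in ALLOWED_COMMANDS


if __name__ == "__main__":
    for candidate in ["git status", "rm -rf /", "git status; rm -rf /"]:
        print(candidate, "->", is_allowed(candidate))
```

An allowlist like this is deliberately conservative and is no substitute for real isolation; it is best treated as one extra layer alongside sandboxed execution and limited privileges.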

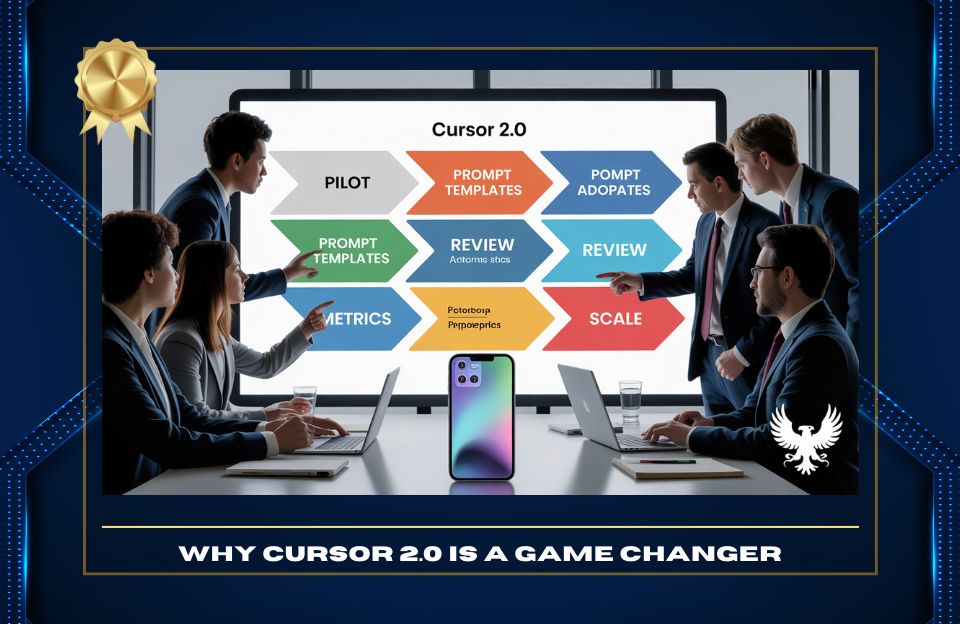

Cursor 2.0: How to get started

If you’re intrigued and want to test Cursor 2.0 + Composer in your team, here’s a practical path:

- Pilot on a small codebase – Choose a non-critical project for 1-2 sprints. Let agents handle scaffolding, refactoring, and tests, while developers review output.

- Define clear prompt templates – Because you’re an orchestrator rather than a typist, craft prompts that specify the goal, the expected outcome and the context (existing architecture, dependencies).

- Use parallel agents – Try sending the same task to 2-3 agents and comparing outputs. Choose the best, refine it.

- Establish review routines – Just as you would for human-written code, set up code review for AI-generated changes. Keep track of errors, adjust prompts/tools.

- Address security/trust early – Use sandboxed terminals (generally available on macOS in Cursor 2.0) so agents run in isolated environments. (Cursor)

- Collect metrics – Track time saved, defect rate of agent output, and developer satisfaction. Use these to build a business case.

- Scale gradually – Once the pilot is successful, integrate into regular backlog tasks (e.g., refactorings, test-gen, scaffolding) and expand to the full team.
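As a starting point for the prompt-template step, a small structure like the following keeps every prompt consistent. The field names (`goal`, `outcome`, `architecture`, `dependencies`) mirror the elements suggested in the list above; the class itself and the example values are illustrative assumptions, not a Cursor feature.

```python
from dataclasses import dataclass, field


@dataclass
class PromptTemplate:
    goal: str
    outcome: str
    architecture: str
    dependencies: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Produce the plain-text prompt handed to an agent."""
        deps = ", ".join(self.dependencies) if self.dependencies else "none listed"
        return (
            f"Goal: {self.goal}\n"
            f"Expected outcome: {self.outcome}\n"
            f"Existing architecture: {self.architecture}\n"
            f"Dependencies: {deps}"
        )


if __name__ == "__main__":
    prompt = PromptTemplate(
        goal="Add pagination to the orders list",
        outcome="A reviewed diff with passing tests",
        architecture="React front end, Node API",
        dependencies=["react", "express"],
    )
    print(prompt.render())
```

Keeping the template in code (or any shared file) makes it easy to version prompts and compare which wording works best over time.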

Pro tip: When you first see agents producing multiple variants (Agent A, Agent B…), label them clearly and keep a spreadsheet: prompt → variant → selected result. Over time, you’ll understand “which prompt style gave the best output in our codebase”.
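If a spreadsheet feels too manual, the same log can live in a CSV file. This is a minimal sketch under assumed column names (`prompt_style`, `variant`, `selected`); adapt them to whatever your team actually records.

```python
import csv
import io
from collections import Counter

FIELDS = ["prompt_style", "variant", "selected"]  # assumed column names


def write_log(rows: list[dict], stream) -> None:
    """Write prompt -> variant -> selected rows as CSV."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def wins_by_prompt_style(stream) -> Counter:
    """Count how often each prompt style produced the selected variant."""
    wins = Counter()
    for row in csv.DictReader(stream):
        if row["selected"] == "yes":
            wins[row["prompt_style"]] += 1
    return wins


if __name__ == "__main__":
    rows = [
        {"prompt_style": "terse", "variant": "Agent A", "selected": "yes"},
        {"prompt_style": "detailed", "variant": "Agent B", "selected": "no"},
        {"prompt_style": "detailed", "variant": "Agent A", "selected": "yes"},
        {"prompt_style": "terse", "variant": "Agent B", "selected": "yes"},
    ]
    buffer = io.StringIO()  # stands in for a real file on disk
    write_log(rows, buffer)
    buffer.seek(0)
    print(wins_by_prompt_style(buffer).most_common())
```

After a few sprints, the tally gives a rough, data-backed answer to the question of which prompt style tends to win in your codebase.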

FAQ on Cursor 2.0

What is Composer in Cursor 2.0?

Composer is the proprietary coding model introduced in Cursor 2.0, optimized for multi-agent, low-latency coding workflows and large code-base contexts. (Cursor)

How many agents can run in Cursor 2.0?

Up to eight AI agents can run in parallel on a single prompt within Cursor 2.0. (Cursor)

What kinds of coding tasks can Composer handle?

Composer supports multi-step coding tasks such as feature creation, refactoring, test generation and large codebase edits, using tools like semantic search, file edits and shell commands. (Cursor)

Is Cursor 2.0 ready for enterprise-scale use?

Yes — Cursor 2.0 includes features such as sandboxed terminals, browser-embedded agents, improved code review tools and multi-agent support aimed at enterprise workflows. (Cursor)

Does using multiple agents improve code quality?

According to Cursor, yes — having multiple agents attempt the same task and selecting the best result significantly improves outcomes, especially on harder tasks. (Cursor)

What are the main risks of agentic coding platforms?

Risks include unintended bugs in large generated changes, dependency on correct prompt design, and integration or security risks (e.g., an agent running an unintended command). (Reddit)

How should teams adopt Cursor 2.0 effectively?

Start small with a pilot, craft prompt templates, review AI output thoroughly, collect metrics, evolve your process and scale only once comfortable with the new agent-oriented workflow.

Is Composer replacing human developers?

No. Composer and the multi-agent workflow are tools to enhance developer productivity — not replace architecture design, code review, and human judgment. Human + AI collaboration is the horizon.

Conclusion & Actionable Takeaways

In sum, Cursor 2.0 with its Composer model and multi-agent architecture is a significant milestone in AI‐driven coding. It offers speed, scale, and workflow re-imagination. But with that comes responsibility — review, orchestration, prompt design, and security become even more important.

If you’re a developer, lead or tech strategist, here are your next steps:

- Run a small pilot to see how agent-oriented coding fits your team/context.

- Build prompt templates and agent orchestration routines early.

- Set up review and quality gates just as you would for human-written code changes.

- Collect metrics: time saved, error rate, developer feedback.

- Train your team: shift the mindset from typing lines to orchestrating agents + reviewing output.

- Ensure your environment is secure: sandboxed execution, limited privileges, and monitoring of agent activity.

By doing these things, you’ll be positioned not just to use a tool — but to harness a new coding paradigm, and stay ahead of the curve.

Disclaimer: The details above reflect publicly available sources as of October 2025 and my hands-on observations. Always evaluate tool suitability for your specific architecture, security and compliance context.
